Last week, OpenAI introduced Sora, a generative video engine that produces high-quality 60-second videos. The reactions echoed the amazement over DALL-E and Midjourney images a bit over a year ago. Soon enough, these videos will be as omnipresent, look as obvious, feel as cheap, and still seem as eerie as AI images.

Apparently, “It will change everything.” In a good way or like AI images? Remember when you saw the first DALL-E and Midjourney pics? Yes, they are everywhere now. Are you still as impressed as you were a year ago? Do you expect a different outcome for AI videos?

Is it going to be different this time? Sure, technically crisp, well-lit, high-definition videos require big budgets. They take time. They need rare expert teams in the creation process. They feel expensive, and they are expensive. The prospect of creating technically high-quality videos in just a few seconds seems very attractive. Great, meaningful pictures are not exactly cheap and easy either.

Now, foreseeably, the same inflation that has hit computer-generated images will hit AI videos. They will quickly beat the Pet Shop Boys at being boring.1 And then they will make those who use them look cheap, lazy, and gloomy. They will not enrich but undermine and devalue the brands and content linked to them.2

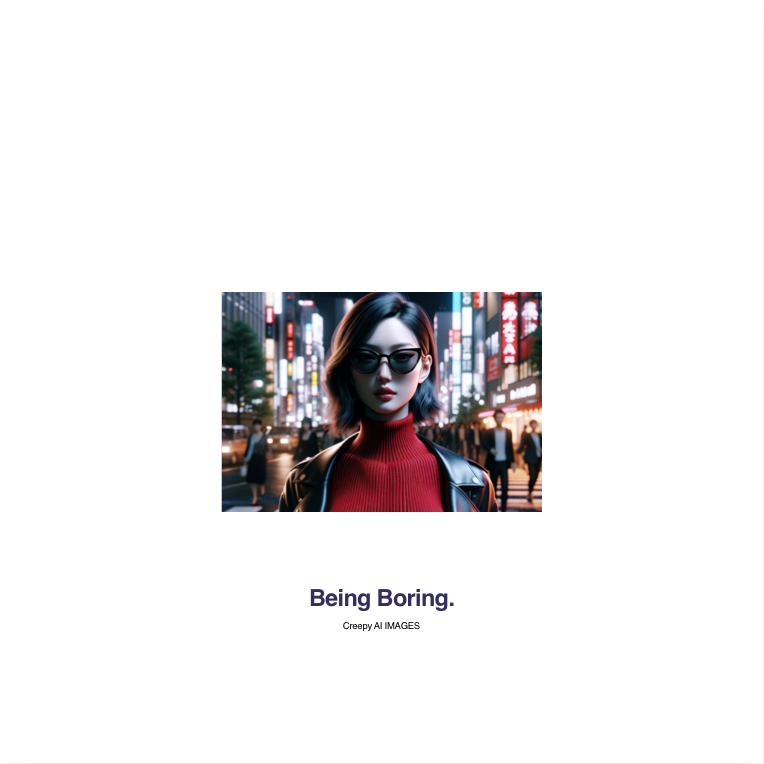

Recognizably Creepy

By now, most of us recognize computer-generated images. The effect they have on us is the opposite of “Ah” and “Oh.” They’re both creepy and boring at the same time. It’s a weird combination, and it’s even weirder how creepy, recognizable, and boring feed into each other.

- They are recognizable because they are creepy

- They are creepy because they are neither meant nor felt

- They are neither meant nor felt because they are made without body or mind

Computer-generated imagery is not bad in every fiber. There certainly are great ways to use AI in a creative process. The more you control your tool and the process, the more mindful the product, and the less it qualifies as “AI”. This article is not about the complex, controlled, professional use of AI imagery and videos. It’s about the generally praised, advertised, common, lazy use3 of it.

Rather than being a practical way to easily add a good image to our articles, lazy AI imagery devalues our texts and makes us look cheap, dull, lazy, and a bit creepy. How come? Maybe it has to do with our knowledge of how these images are made:

- We know that it doesn’t take much time and energy to create them.

- We know, feel, and recognize the type of errors that they come with.

- We know that they are not meaningful by themselves, but recycled visual data that we fill with meaning.

You may get lucky and the computer produces an image, text, sound, or video that enriches your message. Or you may get unlucky and the computer produces some sexist, racist, or otherwise offensive, stupid, destructive, degrading message that shows you in an unintended, bad light.

“This is the Black Hole Sun music video”

We can recognize their faultiness just as well as the fake dinosaurs in the original Jurassic Park movie. At first, AI images seemed fresh, new, and somewhat uncanny. If you took a time machine back thirty years with a couple of AI images on a floppy disk, your ’90s friends would be stunned. You could likely sell them for good money. Aphex Twin would be your first customer. You could make a really good deal selling AI-generated videos to Soundgarden.4

In 1994, the American rock group Soundgarden released a surreal, dark song called Black Hole Sun.5 The lyrics can be interpreted in various ways. Some say they are about nuclear war. Some think Black Hole Sun is about taking heroin. Today, one could argue that it’s about AI images. Their author explains his lyrics as follows: “They’re just words.”

“I wrote it in my head driving home from Bear Creek Studio in Woodinville, a 35–40 minute drive from Seattle. It sparked from something a news anchor said on TV and I heard wrong. I heard ‘blah blah blah black hole sun blah blah blah’. I thought that would make an amazing song title, but what would it sound like?” –Chris Cornell, cit. in Songfacts

Examined closely, the lyrics might strike an academic as dark, surreal rock kitsch. Their history could be bent into a prophecy about AI-generated content. What fully resonates without much bending is the aesthetic of the video.

The video looks just like today’s computer-generated content. It’s oversaturated, deformed, and scary in its lack of humanity and plausibility. It mixes the sweet and the brutal and shows scene after scene without any consideration for story or meaning. According to Howard Greenhalgh, the director of the video, it somewhat mimics the aesthetics of the opening scene of David Lynch’s Blue Velvet. All in all, it was designed to come across as “******* psychotic”. Greenhalgh called it a “horror cartoon”.

As boring as AI imagery has become, it is still as creepy as it was. One can only speculate why. Is it because we are trained to recognize fakeness, sickness, and death in human appearance? Or is it just not good enough yet? The reason may have as much to do with the aesthetic as with our knowledge that humans didn’t create these images and videos.

Soundgarden’s video effects were also computer-generated. The difference is that their distortions were made with intention. AI-generated content is the way it is because it lacks intention. Computer-generated images lack meaning, they lack emotion, and visually we can recognize that. AI images feel like a bad dream, a bad trip, a bad life, where everything looks real but nothing has any meaning. AI images show us things we somehow know, but they feel empty and threaten to drag us into a black hole sun devoid of all sense. The more plausible the look, the scarier their emptiness feels.

Visual AI content will continue to creep us out, no matter how good the simulation becomes. The lack of emotion, intention, meaning, and story, the proximity of the cute and the infernal, the death in AI-generated eyes: none of that is softened by increasing verisimilitude. The horror becomes crisper with the growth in pixel density and technical perfection in sound, light, and physics. The crisper these videos become, the clearer the psychosis they portray.

Soundgarden’s video has a cute lamb for contrast. Its cuteness makes the rest of the video even creepier. Sora’s puppies look cute. Slow motion helps hide their fakeness. But in context, they have a similar effect to the lamb in Soundgarden’s famous video: they add sugar to the psychotic horror so it enters the bloodstream more easily…

Cute puppies are a good showcase because they have no facial expressions. We have a very sensitive recognition apparatus for interpreting human motion and faces, which is why human appearance is so hard to simulate. Cute puppies in slow motion are the opposite: we just have to like them.

No Feeling, No Meaning

“AI will get better and soon we can’t tell anymore.”

Technology gets better over time. So what? Chess computers have gotten so good at chess that we cannot tell who is playing anymore. And yet the difference matters as much as ever. As soon as we know that a computer is playing, the game loses its meaning and becomes a technical exercise. Computers are good for training, but they haven’t made the game more interesting.

Computer-generated movies may become technically perfect. Maybe, one day, the FBI won’t be able to tell the difference between Sora and Stanley Kubrick. But as soon as we knew, the emptiness of its reality would suck us right back into its black hole. Just like computer chess. Why should I care on this side when no one cares on the other side?

“Everybody Makes Mistakes”

It’s not an issue that “AI” makes mistakes. We also make mistakes. The issue is what types of mistakes computers make. As soon as we notice that a visual mistake is not a sign of humanity but of its absence, something funny happens. Realizing “This is AI” is like finding out that we play chess against a computer. The game loses its purpose.

We make mistakes out of a partial deficiency of understanding or concentration. Computer processing errors, by contrast, often reveal a profound lack of understanding of basic reality. Let’s examine the mechanics of creep by looking at some of Sora’s highly praised videos.

How Can AI Video Look So Good But Feel So Weird?

The Portuguese Dog

It looks colorful at first, but soon the story makes us worry about the dog. ‘It can’t possibly get past the shutter without falling,’ we think. Somehow it walks across with ease. Reaching the other window, it looks like it wants to attempt an even more impossible jump. Luckily, the video ends before the inevitable fall. A cute dog in a world of beautiful colors turns into a scary story.

The Woman in Tokyo

It looks plausible and fashionable at first. But then we notice the zebra lines on the ground and how they point to the middle of the street. Then we see the chopped-up arrows and made-up Japanese writing on the signs. At this point, her slowly aging face with the dead eye behind the glasses starts giving us a weird vibe. Her constantly advancing age changes the story every three seconds. You may not have noticed the aging, but you likely felt that something was off.

The Gold Rush

At some point, you will notice the half-horses and horse ghosts appearing and disappearing. And you realize that it’s fake, and unintentionally so. By the end of the clip, it starts looking like the opening of a cowboy zombie movie.

These details almost seem irrelevant in light of the great technical quality, but they matter for our emotional perception. Details bring the story to life. When horses dissolve, the story changes from Gold Rush to The Walking Dead.

Puppy Monster

You may agree or disagree with the previous examples or say that details don’t matter when you get such great image quality. Let’s look at a more objective example:

Once again, we are watching a bunch of cute puppies play. Cute. But then we get irritated. ‘Wait, how many are there? Three, four, five, ten?’ What started as cute puppies at play turns into a horror show about an undefined fur monster that uses its cuteness to make us watch it turn into something ghostly.

We may not consciously notice the transformation, or we may even justify it somehow. “It was probably just hiding behind the other…” But we feel that something is wrong. Movies that completely change the story as they progress without any conceivable reason scare us, especially when they combine the cute with the ghostly.

Meaning and Creep

Knowledge and Meaning

Computers don’t know what they do. They have no comprehension or experience of the world that our languages, visual or verbal, describe. They sustain no inner or outer relationship to the signs that they are merely ordered to statistically process. If you thoughtlessly reuse computer-generated information, you risk being judged accordingly: as someone who either lies or doesn’t know at all what they are saying or doing.

Knowing that information wasn’t meant but generated has the same effect on text, audio, and video as knowing that a chess move wasn’t thought but calculated. The difference in meaning between AI and humans is real. The result of 2 × 2 found by a pocket calculator or calculated in a human mind may numerically be the same. But it has a different meaning when we know that someone has felt the number 2 becoming the number 4.

We no longer need to see AI’s usual faults, like hands with six fingers or objects that defy the laws of physics, to lose interest. We lose interest as soon as we realize that what we read or see never had any intended meaning. This is our natural reaction to lies and bullshit.

Meaning And Fiction

The value of content lies in what it means. If it wasn’t meant to express anything, it simply doesn’t have the meaning you project onto it. Unless you are an old-fashioned post-modernist, you know that, no matter how you interpret things, what something was intended to be doesn’t just influence your interpretation; it matters in the first place. Expression without impression undermines meaning. Messages without meaning waste our time. We instinctively turn away from them.

We are trained to spot lies and fakery. Whether it’s a CGI Princess Leia, Superman taking off, or the unlikely events in a fairytale, we have a well-developed sense for discerning reality from fiction. If the lack of reality is part of the deal we agreed to when engaging with fiction, we embrace the fakeness.

But realizing that what looks real at first is “something else” hooks into a deeper fear. A fear of lies, demons, ghosts, and sickness. As soon as we know that what pretends to be emotion is emptiness, trying to manipulate us, we feel disgust and turn away. This is our natural response to lies and trickery. Excluding the phony messenger from our world can be a matter of survival.

Fiction and Psychotic Lies

It’s not just a lack of reality that creeps us out in computer-generated images. When we watch a play or a superhero movie, we trade in the verisimilitude of the visuals for a good story. We forgive the unlikeliness of visuals and action for the sake of the story. We will trade reality for a good, meaningful story anytime.

The creepiness of generated content doesn’t just come from the creepy looks or the creepy narrative. We watch creepy movies, we read scary stories, and we enjoy them. If horror is the deal we agreed to when engaging with a story, we expect and accept it as part of that deal.

What ultimately creeps us out is that all of this happens without any intended sense or meaning. It seems psychotic. AI acts like a psychotic liar that constantly changes the story for no apparent reason.

Negative Value

While the visual horror of computer-generated content persists, its aesthetic value is fading by the second. There are so many AI-generated images out there, and they all look more or less the same. The same aesthetic devaluation will hit AI videos, until they become a negative asset.

Computer-generated images are quick and easy to make. Their quality is a lot better now than a year ago. And they still do look fun… at first glance. In the meantime, they have started replacing stock images at a fast pace. Like everything cheap and easy, they are losing their creative and economic value at the same pace as they have become ubiquitous. If they are easily recognizable, they now have to be seen as a liability.

Compared to traditional video creation, generative videos will cost close to nothing, and they will be produced in such inflationary numbers that the technical glitz they now seem to offer will grow old within months.

The very same economic inflation that hit computer-generated chess already applies to computer-generated text and computer-generated images. And this is exactly what will happen to computer-generated movies in no time. They will weigh on your credibility.

Conclusion

Computer-generated videos impress at first sight. They will follow the trajectory of computer-generated text and images and become increasingly recognizable: emotionally, aesthetically, and logically.

Through their inflationary use, they will carry close to zero stylistic, economic, or creative value. At some point, they will become a liability that costs more than it adds, dragging everything around it down.

You can use AI as a tool and try to control it, rather than lazily letting it do as it pleases. But we do not have much reason to be optimistic about its controlled use. Commonly, AI is neither used nor designed nor advertised to be used with human intelligence. It is conceived and sold to make us act without thinking.

Used mindlessly, computer-generated imagery will make the typical aesthetic ubiquitous and worthless. Adopting that aesthetic will make everything you do look cheap, careless, and dishonest. Instead of impressing people with nice visuals, you risk creeping them out. Whatever is cheap, fast, and omnipresent doesn’t add any artistic, aesthetic, or economic value. On the contrary.

1. If you are into pop culture, you cannot be seriously offended when you read that the Pet Shop Boys are boring. Ironically, Being Boring was composed in response to Neil Tennant feeling hurt after a Japanese reviewer casually wrote that his band was generally considered uninteresting. “My feelings weren’t hurt. After [Pet Shop Boys co-founder] Chris Lowe and I performed at Tokyo’s Budokan arena in early July 1989, a Japanese reviewer wrote, ‘The Pet Shop Boys are often accused of being boring.’” (Being Boring: The path to a pop elegy). So he went and wrote a song saying that they were never being boring. If you still feel offended, you may unironically lick your wounds with The Guardian, which will teach you “Why Pet Shop Boys’ Being Boring is the perfect pop song.” It’s still boring, though. ↩

2. There are countless examples of smaller blogs that now use AI imagery to illustrate their articles. What seems like a step up is actually a step down. At least finding a stock image required some searching, and some cringing over which cliché image to pick. AI images say little more than: ‘I know Midjourney; it’s up to you to figure out what to see in this Rorschach test.’ ↩

3. The executives of Red Ventures, who bought CNET for $500 million, “…have touted the power of AI with a near-fanatical zeal. ‘From here on out,’ CEO Ric Elias told company employees in a July 2023 all-hands meeting that Futurism obtained audio of, ‘we are going to become AI.’” The result, a couple of months later: “CNET and Bankrate had both ‘paused’ their AI efforts and issued extensive corrections. But the damage was already done, at least in the Wikipedia editors’ eyes. By mid-February, the editors had concluded that anything published by CNET after its 2020 sale to Red Ventures could no longer be considered ‘generally reliable,’ and thus should be taken with a hefty grain of salt.” (Source: Wikipedia No Longer Considers CNET a “Generally Reliable” Source After AI Scandal.) Lazy use of AI to generate text can literally set the house on fire. ↩

4. “While shooting ‘Black Hole Sun,’ he instructed his actors to do one thing: ‘Look basically fucking psychotic.’ […] The only stipulation the band’s members made was that, unlike the rest of the cast, they didn’t want to be wearing stupid grins. Instead, they played it straight-faced in front of a blue screen. The suggestion saved Greenhalgh some money: all the other actors’ exaggerated smiles needed to be digitally enhanced, and back then even primitive computer-generated effects were expensive. ‘Thank God Chris said he didn’t want to smile in it,’ the director said. ‘Because that would’ve made the postproduction budget massive.’” Source: “Look F***king Psychotic”: The Enduring Mystery of Soundgarden’s “Black Hole Sun” ↩

5. The idea for the analogy between Sora’s birthday cake video and Black Hole Sun came from a Threads post by NYT reporter Mike Isaac. ↩